Naive Dropout in RNN: Boosting Performance

Understanding Naive Dropout in RNN

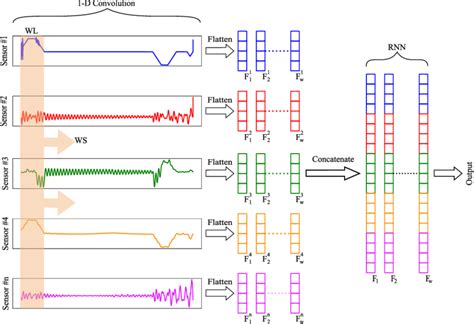

Recurrent Neural Networks (RNNs) are a type of neural network designed to handle sequential data, such as time series, text, and speech. However, RNNs are prone to overfitting, especially when the model is large relative to the amount of training data. One of the techniques for reducing overfitting in RNNs is Naive Dropout.

Naive Dropout is the most direct way of applying the standard Dropout technique to an RNN: neurons are randomly dropped out during training, with a fresh random mask drawn at each time step. In this blog post, we will delve into the concept of Naive Dropout in RNNs, its advantages, and how it can be implemented in R.

What is Naive Dropout?

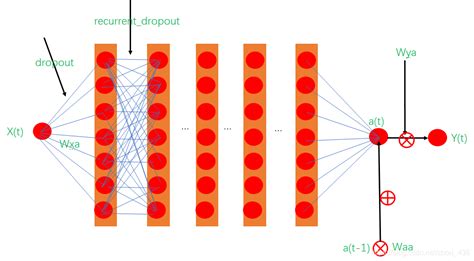

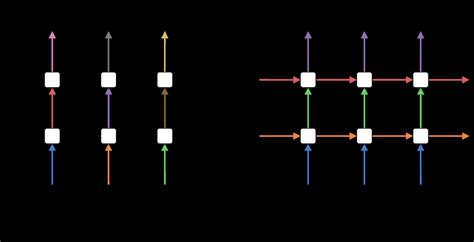

Naive Dropout is a technique used to reduce overfitting in RNNs by randomly dropping out neurons during training. The main idea is to set a random fraction of neurons (the dropout rate) to zero at each training step, which keeps the network from relying too heavily on any single neuron.

In a feed-forward network, standard Dropout samples an independent random mask for each training example. Naive Dropout carries this over to an RNN unchanged: a new mask is sampled independently at every time step, and it is usually applied only to the non-recurrent connections, that is, the inputs and outputs of the recurrent layer. The recurrent connections are typically left alone, because masking the hidden state differently at each step can disrupt the information the network carries across the sequence.
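To make the idea of per-time-step masks concrete, here is a minimal base-R sketch. It assumes the common reading of naive dropout, in which an independent mask is drawn at every time step, and contrasts it with a single mask reused across the whole sequence. The sizes and the names `naive_mask` and `shared_mask` are illustrative only.

```r
# Sketch: two ways to draw dropout masks for a sequence of RNN outputs.
set.seed(42)                      # fixed seed so the run is repeatable

timesteps <- 5                    # illustrative sequence length
units     <- 4                    # illustrative number of neurons
rate      <- 0.5                  # probability of dropping a neuron

# Naive dropout: an independent Bernoulli mask for every time step.
# Rows are time steps, columns are neurons; 1 = keep, 0 = drop.
naive_mask <- matrix(
  rbinom(timesteps * units, size = 1, prob = 1 - rate),
  nrow = timesteps, ncol = units
)

# For contrast: one mask drawn once and reused at every time step.
one_mask    <- rbinom(units, size = 1, prob = 1 - rate)
shared_mask <- matrix(one_mask, nrow = timesteps, ncol = units, byrow = TRUE)

print(naive_mask)    # rows generally differ from one another
print(shared_mask)   # every row is the same
```

In the first matrix a neuron may be kept at one step and dropped at the next, while in the second the same neurons stay dropped for the entire sequence.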

How Does Naive Dropout Work?

Naive Dropout works by randomly setting a fraction of neurons to zero during training. That fraction is the dropout rate, a hyperparameter between 0 and 1: a rate of 0.2, for example, drops about 20% of the neurons at each step.
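The effect of the dropout rate can be checked with a few lines of base R. This is a sketch rather than what Keras does internally: `apply_dropout` is a hypothetical helper, and the rescaling by `1 / (1 - rate)` (often called inverted dropout) is an assumption that mirrors the convention many frameworks use to keep the expected activation unchanged.

```r
# Minimal inverted-dropout sketch in base R (illustrative helper, not a Keras API).
apply_dropout <- function(x, rate, training = TRUE) {
  stopifnot(rate >= 0, rate < 1)
  if (!training || rate == 0) {
    return(x)                     # dropout is a no-op outside training
  }
  keep <- rbinom(length(x), size = 1, prob = 1 - rate)  # 1 = keep, 0 = drop
  x * keep / (1 - rate)           # rescale so the expected value is unchanged
}

set.seed(1)
activations <- rep(1, 10000)      # dummy activations, all equal to 1
dropped <- apply_dropout(activations, rate = 0.2)

mean(dropped == 0)   # fraction of zeroed activations, close to the rate
mean(dropped)        # stays close to 1 because of the rescaling
```

With `training = FALSE` the function returns its input untouched, which is how dropout behaves when the model is used for prediction.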

Here’s a step-by-step explanation of how Naive Dropout works:

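- Forward Pass: During the forward pass, the input sequence is fed through the network one time step at a time, and the output is calculated.

- Dropout: As part of the forward pass during training, a random binary mask is generated at each time step, where each element corresponds to a neuron. Neurons whose mask element is zero have their output set to zero, so on average a fraction of neurons equal to the dropout rate is dropped.

- Backward Pass: During the backward pass, the error between the output and the target is calculated, and the weights are updated from the gradients of that error. Dropped neurons contribute no gradient for that step.

- Repeat: The steps above are repeated for each iteration of the training process. At prediction time, dropout is switched off and all neurons are used.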
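The forward-pass part of these steps can be sketched for a tiny tanh RNN in base R. Everything here is an illustrative assumption: the sizes, the random weights, the helper names `drop_units` and `rnn_forward`, and the choice to mask only the inputs and outputs while leaving the recurrent path untouched. The backward pass is omitted to keep the sketch short.

```r
# Sketch: forward pass of a tiny tanh RNN with naive dropout.
set.seed(7)

timesteps <- 6; n_in <- 3; n_hidden <- 4; rate <- 0.2

x   <- matrix(rnorm(timesteps * n_in), nrow = timesteps)               # one input sequence
W_x <- matrix(rnorm(n_in * n_hidden, sd = 0.5), nrow = n_in)           # input-to-hidden weights
W_h <- matrix(rnorm(n_hidden * n_hidden, sd = 0.5), nrow = n_hidden)   # recurrent weights

# Draw a fresh keep/drop mask and rescale the kept values (inverted dropout).
drop_units <- function(v, rate) {
  v * rbinom(length(v), size = 1, prob = 1 - rate) / (1 - rate)
}

rnn_forward <- function(x, training = TRUE) {
  h   <- rep(0, n_hidden)                            # initial hidden state
  out <- matrix(0, nrow = nrow(x), ncol = n_hidden)
  for (step in seq_len(nrow(x))) {
    # New mask on the input at every time step (training only)
    x_t <- if (training) drop_units(x[step, ], rate) else x[step, ]
    # The recurrent connection h %*% W_h is never masked
    h <- tanh(as.vector(x_t %*% W_x + h %*% W_h))
    # New mask on the output passed to the next layer (training only)
    out[step, ] <- if (training) drop_units(h, rate) else h
  }
  out
}

train_out <- rnn_forward(x, training = TRUE)    # random: masks differ per call
eval_out  <- rnn_forward(x, training = FALSE)   # deterministic: no dropout
dim(train_out)
```

Calling `rnn_forward(x, training = FALSE)` twice gives the same result, while two training calls generally differ because the masks are resampled.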

Advantages of Naive Dropout

Naive Dropout has several advantages, including:

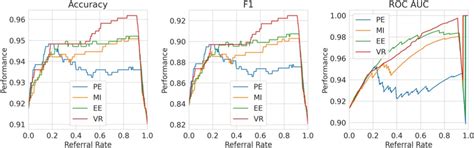

- Reduces Overfitting: Because a different random subset of neurons is dropped at each training step, the network cannot simply memorize the training data through a fixed set of co-adapted neurons.

- Improves Generalization: Since no single neuron can be relied on to be present, the network learns more redundant features, which tends to improve performance on unseen data.

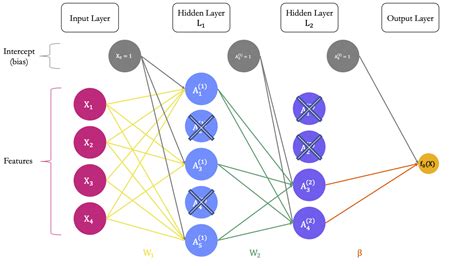

- Easy to Implement: Naive Dropout only requires placing a standard dropout layer before or after the recurrent layer, so it can be applied to any RNN architecture.

Implementing Naive Dropout in R

Naive Dropout can be implemented in R using the Keras package. Here’s an example of how to implement Naive Dropout in R:

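    library(keras)

    # Create a simple RNN model. x_train, y_train, x_test and y_test are
    # assumed to already exist as 3D arrays.
    model <- keras_model_sequential() %>%
      # return_sequences = TRUE emits one output per time step
      layer_lstm(units = 50, return_sequences = TRUE) %>%
      # Naive dropout: standard dropout applied to the LSTM outputs
      layer_dropout(rate = 0.2) %>%
      layer_dense(units = 1)

    # Compile the model
    model %>% compile(
      loss = "mean_squared_error",
      optimizer = optimizer_rmsprop(),
      metrics = c("mae")  # accuracy is not meaningful for a regression loss
    )

    # Train the model
    model %>% fit(
      x_train,
      y_train,
      epochs = 100,
      batch_size = 32,
      validation_data = list(x_test, y_test)
    )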

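In this example, we create a simple RNN model using the layer_lstm function and add a dropout layer using the layer_dropout function. Because the dropout layer sits after the LSTM layer, it masks the LSTM's outputs rather than its recurrent connections. We then compile the model and train it using the fit function.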
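The example leaves x_train, y_train, x_test, and y_test undefined. As a hedged illustration of the shapes the model expects, the base-R sketch below builds random stand-in data; the sizes and the `make_sequences` helper are hypothetical. Since the LSTM uses return_sequences = TRUE and is followed by a dense layer with one unit, the targets need one value per time step.

```r
# Hypothetical stand-in data so the expected array shapes are explicit.
set.seed(123)
n_train <- 200; n_test <- 50
timesteps <- 10; features <- 3

make_sequences <- function(n) {
  # Inputs: samples x timesteps x features
  x <- array(rnorm(n * timesteps * features), dim = c(n, timesteps, features))
  # Targets: mean of the features at each time step, plus a little noise
  y <- array(apply(x, c(1, 2), mean) + rnorm(n * timesteps, sd = 0.1),
             dim = c(n, timesteps, 1))
  list(x = x, y = y)
}

train <- make_sequences(n_train)
test  <- make_sequences(n_test)
x_train <- train$x; y_train <- train$y
x_test  <- test$x;  y_test  <- test$y

dim(x_train)  # 200 10 3  (samples, timesteps, features)
dim(y_train)  # 200 10 1  (samples, timesteps, outputs)
```

With real data, only the array layout matters: the first dimension indexes sequences, the second indexes time steps, and the third indexes features or outputs.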

📝 Note: The dropout rate is a hyperparameter that needs to be tuned for each specific problem. A dropout rate of 0.2 is used in this example, but you may need to adjust this value depending on your specific problem.

Conclusion

Naive Dropout is a simple yet effective technique for reducing overfitting in RNNs. By randomly dropping out neurons during training, it lowers the network's reliance on any single neuron and improves generalization. In R, it can be implemented with the Keras package by adding a layer_dropout layer to the model.

What is the main advantage of Naive Dropout?

The main advantage of Naive Dropout is that it helps to prevent overfitting in RNNs by randomly dropping out neurons during training.

How does Naive Dropout work?

Naive Dropout works by randomly setting a fraction of neurons to zero during training. That fraction is the dropout rate, a hyperparameter that controls the proportion of neurons that are dropped out.

How do I implement Naive Dropout in R?

Naive Dropout can be implemented in R using the Keras package. You can add a dropout layer to your RNN model using the layer_dropout function.